Compiling FFmpeg into .so Shared Libraries

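Adding CMake support to the Android project

In `build.gradle`, add the following under `defaultConfig`. `abiFilters` restricts the build to the listed CPU architectures (ABIs):

```groovy
externalNativeBuild {
    cmake {
        abiFilters "arm64-v8a"
    }
}
```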

In `build.gradle`, add `externalNativeBuild` under `android` and point it at the CMake configuration file:

```groovy
externalNativeBuild {
    cmake {
        path "CMakeLists.txt"
        version "3.10.2"
    }
}
```

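As configured above, it is advisable to place a `CMakeLists.txt` in the same directory as `build.gradle`. This top-level file makes later integration easier, and it pulls in each module with `add_subdirectory`:

```cmake
cmake_minimum_required(VERSION 3.4.1)

ADD_SUBDIRECTORY(src/main/cpp/decoder)
```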
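Because `abiFilters` decides which ABIs are built, it can help to confirm which ABI a native library was actually compiled for. A minimal sketch, assuming the usual compiler-predefined architecture macros (`__aarch64__`, `__arm__`, `__x86_64__`, `__i386__`) and treating 32-bit ARM as `armeabi-v7a`; the helper name `compiledAbi` is hypothetical:

```cpp
#include <cassert>
#include <string>

// Hypothetical helper: returns the Android ABI name that matches the architecture
// this translation unit was compiled for, using compiler-predefined macros.
std::string compiledAbi() {
#if defined(__aarch64__)
    return "arm64-v8a";
#elif defined(__arm__)
    return "armeabi-v7a";  // assumption: 32-bit ARM builds target armeabi-v7a
#elif defined(__x86_64__)
    return "x86_64";
#elif defined(__i386__)
    return "x86";
#else
    return "unknown";
#endif
}
```

Logging the result once from the native library (for example with `__android_log_print`) shows which ABI's copy of the library was loaded on the device.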

Integrating the .so files with CMake

- In the subdirectory added above, place the FFmpeg `.so` files and the `include` folder, and create a child `CMakeLists.txt`.
- Prefer variables such as `${PROJECT_SOURCE_DIR}` and `${ANDROID_ABI}` over hard-coded absolute paths.
- Add the FFmpeg dependencies with `include_directories`, `add_library`, `set_target_properties` and `target_link_libraries`, and collect all C/C++ source files in the target directory with `aux_source_directory`.

An example:

```cmake
cmake_minimum_required(VERSION 3.4.1)

# Header directory for the shared libraries required for decoding;
# the include path also lets the IDE offer code completion
include_directories(
        "${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/include/${ANDROID_ABI}"
)

add_library( avutil SHARED IMPORTED )
set_target_properties( avutil PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libavutil.so )

add_library( swresample SHARED IMPORTED )
set_target_properties( swresample PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libswresample.so )

add_library( avcodec SHARED IMPORTED )
set_target_properties( avcodec PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libavcodec.so )

add_library( avfilter SHARED IMPORTED )
set_target_properties( avfilter PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libavfilter.so )

add_library( swscale SHARED IMPORTED )
set_target_properties( swscale PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libswscale.so )

add_library( avdevice SHARED IMPORTED )
set_target_properties( avdevice PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libavdevice.so )

add_library( avformat SHARED IMPORTED )
set_target_properties( avformat PROPERTIES IMPORTED_LOCATION
        ${PROJECT_SOURCE_DIR}/src/main/cpp/decoder/thirdparty/ffmpeg/libs/${ANDROID_ABI}/libavformat.so )

set(CMAKE_CXX_FLAGS "${CMAKE_CXX_FLAGS} -std=gnu++11 -fexceptions")

aux_source_directory(src SRCS)

add_library(myffmpeg SHARED ${SRCS})

# log is also a dependency, so it must be listed here.
# Note: link order matters for static libraries: a library that others depend on
# goes after them. log is depended on last, so it comes last.
target_link_libraries(myffmpeg avcodec avdevice avfilter avformat avutil swresample log)
```

Writing the JNI files

- Java class declaring the native method
  - Class: `cn.MetaNetworks.myffmpegtest.codec.FFMpegCodecManager`
  - Method: `decodeAudio`

```java
public native long decodeAudio(String filePath, String dstPath, OnCodecListener listener);
```
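The C++ side must export a function whose name is derived from this declaration: `Java_`, then the fully qualified class name with `.` replaced by `_`, then `_` and the method name; an underscore already present in a name is escaped as `_1`. A minimal sketch of that rule for plain ASCII names (it deliberately ignores the other escapes, such as `_0xxxx` for non-ASCII characters, and the `__` suffix used for overloaded native methods); `mangle` and `jniSymbolName` are hypothetical helpers:

```cpp
#include <cassert>
#include <string>

// Escapes one name per the JNI rule for ASCII input:
// '_' becomes "_1", and package separators '.' or '/' become '_'.
std::string mangle(const std::string &name) {
    std::string out;
    for (char c : name) {
        if (c == '_') out += "_1";
        else if (c == '.' || c == '/') out += '_';
        else out += c;
    }
    return out;
}

// Builds the short JNI symbol name for a (non-overloaded) native method.
std::string jniSymbolName(const std::string &className, const std::string &methodName) {
    return "Java_" + mangle(className) + "_" + mangle(methodName);
}
```

Applied to `FFMpegCodecManager.decodeAudio`, this yields `Java_cn_MetaNetworks_myffmpegtest_codec_FFMpegCodecManager_decodeAudio`, which is why renaming the Java package or class without renaming the C++ function leads to an `UnsatisfiedLinkError` when the method is called.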

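- Native C++ function
  - Its name must follow the JNI naming rule, so that it matches the Java package, class and method names.
  - The function body below corresponds to the previous installment.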


```cpp
#include <jni.h>
#include <cstdio>
#include <exception>

extern "C" {
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libavutil/channel_layout.h>
#include <libavutil/error.h>
#include <libswresample/swresample.h>
}

// decode(), on_error(), on_progress(), on_success() and CODEC_ERROR are helpers
// that are not defined in this article; their declarations must be visible here.

extern "C" JNIEXPORT jlong JNICALL
Java_cn_MetaNetworks_myffmpegtest_codec_FFMpegCodecManager_decodeAudio(JNIEnv *env,
                                                                       jobject /* this */,
                                                                       jstring filePath,
                                                                       jstring dstPath,
                                                                       jobject listener) {
    jclass callback = env->GetObjectClass(listener);
    const char *filename = nullptr, *outfilename = nullptr;
    const AVCodec *codec = nullptr;
    AVFormatContext *formatContext = nullptr;
    AVCodecContext *context = nullptr;
    AVPacket *pkt = nullptr;
    AVFrame *decoded_frame = nullptr;
    SwrContext *swr_ctx = nullptr;   // resampling context
    FILE *f = nullptr, *outfile = nullptr;
    int ret;
    int audioIndex = -1;             // index of the first audio stream
    long times = 0;                  // number of audio packets processed
    long total_size = 0, decoded_size = 0;
    jlong result = -1;               // 0 on success, -1 on failure
    char err_buf[AV_ERROR_MAX_STRING_SIZE];
    jmethodID onCodecStart = env->GetMethodID(callback, "onCodecStart", "()V");

    try {
        // Decoding starts: notify the Java listener
        env->CallVoidMethod(listener, onCodecStart);

        filename = env->GetStringUTFChars(filePath, nullptr);
        outfilename = env->GetStringUTFChars(dstPath, nullptr);

        // Open the input; avformat_open_input allocates the format context itself
        if (avformat_open_input(&formatContext, filename, nullptr, nullptr) != 0) goto end;
        if (avformat_find_stream_info(formatContext, nullptr) < 0 || formatContext->nb_streams == 0) goto end;

        // Find the index of the first audio stream
        for (unsigned int i = 0; i < formatContext->nb_streams; ++i) {
            if (formatContext->streams[i]->codecpar->codec_type == AVMEDIA_TYPE_AUDIO) {
                audioIndex = static_cast<int>(i);
                break;
            }
        }
        if (audioIndex == -1) goto end;

        codec = avcodec_find_decoder(formatContext->streams[audioIndex]->codecpar->codec_id);
        if (!codec) goto end;
        context = avcodec_alloc_context3(codec);
        if (!context) goto end;
        // Use the parameters of the audio stream, which is not necessarily stream 0
        if (avcodec_parameters_to_context(context, formatContext->streams[audioIndex]->codecpar) < 0) goto end;
        if (avcodec_open2(context, codec, nullptr) < 0) goto end;

        pkt = av_packet_alloc();
        decoded_frame = av_frame_alloc();
        if (!pkt || !decoded_frame) {
            on_error(env, callback, listener, CODEC_ERROR, "cannot allocate packet or frame");
            goto end;
        }

        // The input is opened with fopen only to measure its size for progress reports
        f = fopen(filename, "rb");
        if (!f) {
            fprintf(stderr, "Could not open %s\n", filename);
            goto end;
        }
        outfile = fopen(outfilename, "wb");
        if (!outfile) goto end;  // context is freed once at `end`; freeing it here too would double-free

        // Old channel-layout API, deprecated since FFmpeg 5.1
        if (context->channel_layout == 0)
            context->channel_layout = av_get_default_channel_layout(context->channels);

        // Resample to mono, signed 16-bit samples, 16 kHz
        swr_ctx = swr_alloc_set_opts(nullptr,
                                     AV_CH_LAYOUT_MONO, AV_SAMPLE_FMT_S16, 16000,
                                     context->channel_layout, context->sample_fmt,
                                     context->sample_rate, 0, nullptr);
        if (!swr_ctx) goto end;
        if ((ret = swr_init(swr_ctx)) < 0) {
            // av_err2str uses a C99 compound literal that C++ compilers often reject
            av_strerror(ret, err_buf, sizeof(err_buf));
            fprintf(stderr, "%s\n", err_buf);
            on_error(env, callback, listener, CODEC_ERROR, err_buf);
            goto end;
        }

        // Measure the file size, then go back to the start
        fseek(f, 0L, SEEK_END);
        total_size = ftell(f);
        fseek(f, 0L, SEEK_SET);

        while (av_read_frame(formatContext, pkt) == 0) {
            if (pkt->stream_index == audioIndex && pkt->size > 0) {
                decoded_size += pkt->size;
                times++;
                decode(context, pkt, swr_ctx, decoded_frame, outfile);
                // Report progress every 30 packets (compressed bytes read / file size)
                if (times % 30 == 0 && total_size > 0)
                    on_progress(env, callback, listener, int(decoded_size * 100.0 / total_size));
            }
            av_packet_unref(pkt);  // release the packet's data before the next read
        }

        // Flush the decoder with an empty packet
        pkt->data = nullptr;
        pkt->size = 0;
        decode(context, pkt, swr_ctx, decoded_frame, outfile);
        on_success(env, callback, listener, outfilename);
        result = 0;
    } catch (std::exception &e) {
        on_error(env, callback, listener, CODEC_ERROR, "codec is not supported. exiting");
    }

end:
    // Single cleanup path for success, failure and exceptions (all free functions accept null)
    if (outfile) fclose(outfile);
    if (f) fclose(f);
    swr_free(&swr_ctx);
    av_frame_free(&decoded_frame);
    av_packet_free(&pkt);
    avcodec_free_context(&context);
    avformat_close_input(&formatContext);
    if (filename) env->ReleaseStringUTFChars(filePath, filename);
    if (outfilename) env->ReleaseStringUTFChars(dstPath, outfilename);
    return result;
}
```
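The function above releases every resource by hand at the end. A common C++ alternative, not the approach used in this article, is RAII: attach each cleanup to an object's lifetime so that every exit path, including early returns and exceptions, releases it automatically. A minimal sketch with `std::unique_ptr` and a custom deleter for `FILE*`; `FileCloser`, `FilePtr` and `writeText` are hypothetical names, and wrapping FFmpeg handles the same way (a deleter that calls, say, `avcodec_free_context`) is an assumption not shown here:

```cpp
#include <cassert>
#include <cstdio>
#include <memory>

// Deleter that closes a FILE* when the owning unique_ptr is destroyed.
struct FileCloser {
    void operator()(std::FILE *fp) const noexcept {
        if (fp != nullptr) std::fclose(fp);
    }
};

using FilePtr = std::unique_ptr<std::FILE, FileCloser>;

// Hypothetical helper: writes `text` to `path`, returning false on failure.
// The file is closed by FilePtr's destructor on every return path.
bool writeText(const char *path, const char *text) {
    FilePtr out(std::fopen(path, "wb"));
    if (!out) return false;                              // nothing was opened
    if (std::fputs(text, out.get()) < 0) return false;   // closed automatically
    return true;                                         // closed automatically
}
```

Because cleanup lives in destructors, no `goto` label or duplicated cleanup block in a `catch` is needed.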

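How the Java and C++ sides call each other:

Java calling C++

- Simply call the corresponding `native` method from Java.

C++ calling Java

Use a `jclass` and a `jmethodID`:

- Any object passed in through a Java `native` method arrives in C++ as a `jobject`.
- `env->GetObjectClass(object)` returns the class of that object.
- `env->GetMethodID` looks up the method you want to call and returns its `jmethodID`.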

注意:

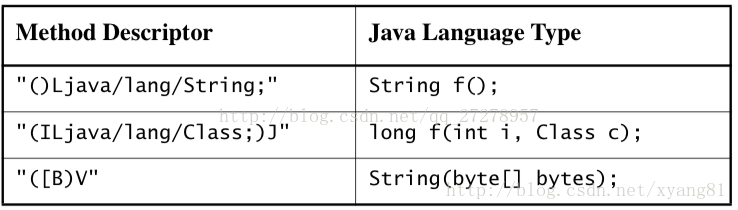

jmethodID GetMethodID(jclass clazz, const char* name, const char* sig)最后一个为sig,需要描述函数的参数与返回值。 -

格式为

([params;])L返回类型-

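Primitive types

| Java | Native | Signature |
|---|---|---|
| byte | jbyte | B |
| char | jchar | C |
| double | jdouble | D |
| float | jfloat | F |
| int | jint | I |
| short | jshort | S |
| long | jlong | J |
| boolean | jboolean | Z |
| void | void | V |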

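Reference types

| Java | Native | Signature |
|---|---|---|
| Any object | jobject | `L` + fully qualified class name + `;` |
| Class | jclass | Ljava/lang/Class; |
| String | jstring | Ljava/lang/String; |
| Throwable | jthrowable | Ljava/lang/Throwable; |
| Object[] | jobjectArray | `[L` + fully qualified class name + `;` |
| byte[] | jbyteArray | [B |
| char[] | jcharArray | [C |
| double[] | jdoubleArray | [D |
| float[] | jfloatArray | [F |
| int[] | jintArray | [I |
| short[] | jshortArray | [S |
| long[] | jlongArray | [J |
| boolean[] | jbooleanArray | [Z |

In the class name, package separators are written as `/` (for example `java/lang/String`). Some examples:

- `void onCodecStart()` → `()V`
- `int add(int a, int b)` → `(II)I`
- `String toString()` → `()Ljava/lang/String;`
- `void setData(byte[] data, long ts)` → `([BJ)V`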

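- If you are unsure how to write a descriptor, compile the `.java` file with `javac` and run `javap -s -p` on the resulting `.class` file; it prints the descriptor of every member, including private ones.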
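The rules in the two tables are mechanical enough to encode directly when `javap` is not at hand. A minimal sketch, assuming Java types are written as in source code with fully qualified class names (`int`, `java.lang.String`, `int[]`; nested classes would need `$`, as in `Outer$Inner`); `typeDescriptor` and `methodDescriptor` are hypothetical helpers:

```cpp
#include <cassert>
#include <map>
#include <string>
#include <vector>

// Maps a Java type name to its JNI descriptor, e.g. "int[]" -> "[I".
std::string typeDescriptor(const std::string &javaType) {
    // Each trailing "[]" adds one leading '['
    if (javaType.size() > 2 && javaType.compare(javaType.size() - 2, 2, "[]") == 0) {
        return "[" + typeDescriptor(javaType.substr(0, javaType.size() - 2));
    }
    static const std::map<std::string, std::string> primitives = {
        {"byte", "B"}, {"char", "C"}, {"double", "D"}, {"float", "F"},
        {"int", "I"},  {"short", "S"}, {"long", "J"}, {"boolean", "Z"}, {"void", "V"}};
    auto it = primitives.find(javaType);
    if (it != primitives.end()) return it->second;
    // Reference type: 'L' + class name with '.' replaced by '/' + ';'
    std::string cls = javaType;
    for (char &c : cls) {
        if (c == '.') c = '/';
    }
    return "L" + cls + ";";
}

// Builds "(parameter descriptors)return descriptor".
std::string methodDescriptor(const std::vector<std::string> &params, const std::string &ret) {
    std::string sig = "(";
    for (const auto &p : params) sig += typeDescriptor(p);
    return sig + ")" + typeDescriptor(ret);
}
```

For example, `methodDescriptor({"java.lang.String", "java.lang.String", "int"}, "long")` produces `(Ljava/lang/String;Ljava/lang/String;I)J`.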

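Calling the method

Invoke it with `env->Call<Type>Method`, where `<Type>` matches the Java return type (for example `CallVoidMethod`). For an instance method, pass the object instance, usually the `jobject` passed in from Java. For a static method, obtain the ID with `GetStaticMethodID` and call `env->CallStatic<Type>Method`, passing the `jclass` instead of an instance.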
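The `<Type>` part follows directly from the Java return type: each primitive has its own variant, every reference type (including `String` and arrays) uses `Object`, and static methods use the `CallStatic` family. A small sketch of that naming rule; `callFunctionName` is a hypothetical helper that only returns the name of the `JNIEnv` member to use, it does not call anything:

```cpp
#include <cassert>
#include <map>
#include <string>

// Returns the JNIEnv member used to invoke a Java method with the given
// return type, e.g. ("void", false) -> "CallVoidMethod".
std::string callFunctionName(const std::string &javaReturnType, bool isStatic) {
    static const std::map<std::string, std::string> variants = {
        {"void", "Void"},   {"boolean", "Boolean"}, {"byte", "Byte"},
        {"char", "Char"},   {"short", "Short"},     {"int", "Int"},
        {"long", "Long"},   {"float", "Float"},     {"double", "Double"}};
    auto it = variants.find(javaReturnType);
    // Any other type (String, arrays, user classes) comes back as a jobject
    const std::string variant = (it != variants.end()) ? it->second : "Object";
    return std::string(isStatic ? "CallStatic" : "Call") + variant + "Method";
}
```

For the `onCodecStart` callback used earlier (returns `void`, instance method) this gives `CallVoidMethod`, the call the decoding function makes.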